Recently I have been binge-listening to a lot of Security Now episodes, all while waiting for the new one to come out each week.

Steve Gibson publishes transcripts of every episode on grc.com, not only in HTML but also in plain text.

I thought about the information that could be extracted from all that data, and the first simple, quick idea that occurred to me was to make one of those cool word clouds (also known as tag clouds, after their original use).

Finding a library

I didn’t want to implement a word cloud generator myself, and I figured that by now there would be more than one available. I decided to do all the work in Python, since it’s an awesome tool for this kind of thing and I could also use some practice with it.

After a quick search I found word_cloud. Looking at its docs I saw it provided the function generate_from_frequencies(frequencies), which:

Create[s] a word_cloud from words and frequencies.

Parameters: frequencies : array of tuples

Exactly what I needed. Also, the sample results looked nice, so this library was a quick sell.

This library also offers functions to process and filter text, but I wanted to implement those myself and limit its use to generating the cloud. That way, if I later wanted to generate clouds with another tool that lacks those extra functions, it would be easy to switch.

Getting the transcripts

Of course, the best tool for this was good old wget.

Luckily, Mr. Gibson is very tidy, and the URLs for everything are uniform. This is what they look like for the plain-text transcripts: https://www.grc.com/sn/sn-001.txt. The only thing that we need to take care of is the zero padding at the beginning of the episode number (which is easily done).

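```bash
#!/bin/bash

LAST_EPISODE=520 # Episode 520 - August 11th 2015

E=1
until [ "$E" -gt "$LAST_EPISODE" ]; do
    # Zero-pad the episode number to three digits (1 -> 001)
    PADDED_NUM=$(printf '%03d' "$E")
    wget --no-clobber --wait=3s --random-wait \
        "https://www.grc.com/sn/sn-${PADDED_NUM}.txt"
    # --wait only applies between retrievals within a single wget run,
    # and each run here fetches one file, so also pause between episodes
    sleep 3
    ((E=E+1))
done
```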

It’s important to always set a sensible wait interval to be a polite visitor. Also, the --no-clobber option skips files that already exist locally, so re-running the script never downloads the same transcript twice.
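For comparison, the same download loop can be sketched in Python using only the standard library. This is an illustration, not the script used for this post: the helper names episode_url and download_all are hypothetical, and the random pause of 0.5 to 1.5 times the base wait mimics what wget's --random-wait does with --wait.

```python
import os
import random
import time
import urllib.request

# Transcript URL pattern, with the episode number zero-padded to three digits
BASE_URL = "https://www.grc.com/sn/sn-{:03d}.txt"


def episode_url(episode):
    """Build the plain-text transcript URL for an episode (hypothetical helper)."""
    return BASE_URL.format(episode)


def download_all(last_episode, wait=3.0):
    """Download transcripts 1..last_episode into the current directory (hypothetical helper)."""
    for episode in range(1, last_episode + 1):
        url = episode_url(episode)
        filename = url.rsplit("/", 1)[-1]  # e.g. sn-001.txt
        # Like --no-clobber: skip files we already have
        if os.path.exists(filename):
            continue
        urllib.request.urlretrieve(url, filename)
        # Like --wait plus --random-wait: pause between 0.5x and 1.5x the base wait
        time.sleep(random.uniform(0.5 * wait, 1.5 * wait))
```

Calling download_all(520) would fetch the same 520 files as the shell script.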

Parsing the data

Now that we have a directory with all .txt transcripts, it’s time to get all the words and count them.

For this I started by declaring word_count = dict(), a dictionary mapping each word to the number of times it appears across all transcripts.

Then, reading file by file, and within each file line by line, the first thing I did was strip out punctuation = "!()-[]{};:\",<>./?@#$%^&*_~". I decided not to include the apostrophe (') at this point, since removing it would mangle contractions and possessives (for example, “don’t” would become “don t” and “Gibson’s” would become “Gibson s”). To do this filtering I used the str.translate() method.
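In Python 3, str.translate() no longer accepts a string of characters to delete; the usual replacement is a table built with str.maketrans(). A minimal sketch of that step with the same punctuation string shows that apostrophes survive while the listed characters are removed (strip_punctuation is just an illustrative name):

```python
# Same characters as above; the apostrophe is deliberately absent
punctuation = "!()-[]{};:\",<>./?@#$%^&*_~"

# The third argument of str.maketrans lists characters to delete
DELETE_PUNCTUATION = str.maketrans("", "", punctuation)


def strip_punctuation(text):
    """Remove every character listed in `punctuation`."""
    return text.translate(DELETE_PUNCTUATION)


print(strip_punctuation("Don't panic! (Really.) It's Steve's show."))
# → Don't panic Really It's Steve's show
```

One side effect worth knowing: because "-" is in the list, hyphenated words get glued together, so "e-mail" becomes "email".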

Another filtering step was converting everything to lowercase, so that “The” at the start of a sentence and “the” in the middle of one end up as the same dictionary key.

Counting the words

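The loop ends up looking like this. It is shown here for Python 3, where str.translate() takes a translation table; the original Python 2 call was line.translate(None, punctuation).

```python
import glob

punctuation = "!()-[]{};:\",<>./?@#$%^&*_~"
# Translation table that deletes every punctuation character
strip_punctuation = str.maketrans("", "", punctuation)

word_count = dict()

# For each transcript downloaded by the wget script (sn-001.txt, sn-002.txt, ...)
for path in glob.glob("sn-*.txt"):
    # Assumes UTF-8; undecodable bytes are skipped
    with open(path, encoding="utf-8", errors="ignore") as f:
        for line in f:
            # Get rid of punctuation:
            line = line.translate(strip_punctuation)
            # Split line into words:
            words = line.split()
            for word in words:
                # Normalizing to lower case:
                word = word.lower()
                if word not in word_count:
                    word_count[word] = 1
                else:
                    word_count[word] += 1
```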

The whole dictionary is then dumped into a .csv file, one word,appearances pair per line, with the words in no particular order.
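The dump itself is not shown here; a minimal sketch might look like the following. Because commas were already stripped as punctuation, no word can contain one, so a plain word,count line needs no CSV quoting and can be read back later with a simple split(','). The function name dump_counts is hypothetical.

```python
def dump_counts(word_count, path="output.csv"):
    """Write one 'word,count' line per dictionary entry (unsorted)."""
    with open(path, "w", encoding="utf-8") as f:
        for word, count in word_count.items():
            f.write(f"{word},{count}\n")
```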

The full counting script, count.py, counted 6,202,748 words across all transcripts, of which 61,917 were unique.
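Both figures fall straight out of the counts: the total is the sum of all values, and the number of unique words is the number of keys. A small sketch (corpus_stats is a hypothetical name) that also uses collections.Counter as a more compact alternative to the if/else counting loop:

```python
from collections import Counter


def corpus_stats(words):
    """Count words and return (counts, total occurrences, unique words)."""
    counts = Counter(words)  # same result as the manual dict loop
    return counts, sum(counts.values()), len(counts)


counts, total, unique = corpus_stats("the cat and the hat".split())
print(total, unique)  # → 5 4
```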

Getting the most-used words

For this we need to read the previously generated file into a list of tuples (word, count) and sort descending by the second component. From there we can get the largest n tuples and then feed them directly to word_cloud.
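Sorting the full list works fine at this size, but when only the top n entries matter, heapq.nlargest() from the standard library returns them without sorting everything. A sketch, assuming the (word, count) tuples described above (top_n is a hypothetical name):

```python
import heapq


def top_n(words_freq, n):
    """Return the n (word, count) tuples with the highest counts, largest first."""
    return heapq.nlargest(n, words_freq, key=lambda t: t[1])


print(top_n([("a", 3), ("b", 7), ("c", 5)], 2))  # → [('b', 7), ('c', 5)]
```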

But there is a problem

It turns out that most of the words at the top of the list are basic words like “the”, “a” and “to”. That is too obvious, and not very interesting.

Here’s the actual output of the top 10:

```
$ head output.csv
the,248075
and,175029
to,169788
that,139768
a,138489
i,124485
of,119244
it,115041
is,102048
you,99498
```

So how can we filter out the frequent but meaningless words to get at the frequent, meaningful ones?

It turns out there is a name for them: stop words. Search engines and full-text indexes commonly filter them out precisely because they carry little meaning.

After a quick search I found a couple of stop-word lists in English. I ended up using the largest one, which had 529 words in total. It can be found over here.

To implement that, I read the whole list into stop_words = set() and then check each word against the set before adding it to the list of tuples to be sorted.
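A minimal sketch of that check, assuming the list has one stop word per line (the file layout is an assumption, and load_stop_words and remove_stop_words are hypothetical names). A set is used rather than a list because membership tests on a set take constant time on average, which matters when checking tens of thousands of words against 529 entries.

```python
def load_stop_words(lines):
    """Build a set of lowercase stop words from an iterable of lines (e.g. an open file)."""
    return {line.strip().lower() for line in lines if line.strip()}


def remove_stop_words(words_freq, stop_words):
    """Keep only (word, count) tuples whose word is not a stop word."""
    return [(word, count) for word, count in words_freq if word not in stop_words]


stop_words = load_stop_words(["the\n", "and\n", "a\n"])
print(remove_stop_words([("the", 9), ("security", 4), ("a", 7)], stop_words))
# → [('security', 4)]
```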

At the same step where stop words are checked, I had to add another filter to keep non-printable characters out of the top list. I decided to put that filter here rather than in the count script, so it runs once per unique word (61,917 of them) instead of on every one of the 6,202,748 word occurrences. That filter keeps only the characters found in string.printable.
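One caveat if you run this today: in Python 3, filter() returns an iterator instead of a string, so its result has to be joined back together. A sketch of the printable-character filter under that assumption (keep_printable is a hypothetical name); note that string.printable contains only ASCII characters, so accented letters are dropped as well:

```python
import string

# ASCII letters, digits, punctuation and whitespace
PRINTABLE = set(string.printable)


def keep_printable(word):
    """Drop every character that is not in string.printable."""
    return "".join(c for c in word if c in PRINTABLE)


print(keep_printable("caf\u00e9"))   # → caf
print(keep_printable("wi\u200bfi"))  # → wifi (zero-width space removed)
```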

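```python
import string

n = 1000  # how many of the most frequent words to keep

# Read all stop words and add each of them to the set.
# "stop_words.txt" (one word per line) is a hypothetical name for the downloaded list.
stop_words = set()
with open("stop_words.txt", encoding="utf-8") as f:
    for line in f:
        stop_words.add(line.strip().lower())

# Read the whole list of words and add them to a list:
words_freq = []
with open("output.csv", encoding="utf-8") as f:  # word,appearances
    for line in f:
        fields = line.strip().split(',')
        # Filtering non-printable characters (filter() returns an iterator in Python 3, so join instead):
        word = ''.join(c for c in fields[0] if c in string.printable)
        # Skip empty results, stop words, and anything containing an apostrophe:
        if word and word not in stop_words and "'" not in word:
            words_freq.append((word, int(fields[1])))

# Sorting descending by number of appearances:
words_freq.sort(key=lambda t: t[1], reverse=True)

# Keep the n first elements from words_freq and throw away the rest:
words_freq = words_freq[:n]
```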

Generating the cloud

After all that, it is just a matter of feeding that list of n tuples, words_freq, to word_cloud.

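```python
from wordcloud import WordCloud

# Newer wordcloud releases expect a dict instead: generate_from_frequencies(dict(words_freq))
w = WordCloud(width=1920, height=1080, max_words=len(words_freq)).generate_from_frequencies(words_freq)
```

word_cloud does its magic for a while, and then we can easily export the result to an image:

```python
w.to_file("cloud.png")
```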

Result

Here’s the word cloud containing the top 1000 words in Security Now episodes from 1 to 520:

Extra

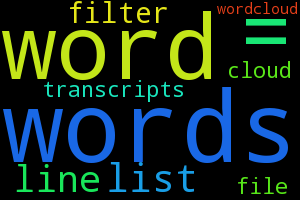

To get a little bit meta, here is a word cloud of the top 10 words in this post. (Yep, this very sentence is included.)